Teleoperation system from ETH Zürich

Teleoperation is the control of machines from a distance, for example when they operate in dangerous or hostile environments. Many autonomous vehicle (AV) companies are also considering teleoperation as part of their self-driving car systems.

One of the main challenges for such teleoperation systems is minimising the glass-to-glass (G2G) latency, i.e. the time from the moment a scene is captured by the machine-mounted cameras to the moment it appears on the operator’s screen or goggles. Low G2G latency is essential for comfortable and safe machine control. The operator also often needs additional assistance information (metadata, analytics) to control the machine effectively.
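G2G latency is the sum of the delays along the whole chain, typically capture, readout, processing, encoding, network transfer, decoding, and display. One way to see where the time goes is to timestamp each frame at the end of every stage against a common clock and split the total into per-stage durations. The sketch below assumes such timestamps exist; the function name `g2g_breakdown` and the stage names used with it are hypothetical and not part of the RSL system or the IFF SDK.

```python
def g2g_breakdown(capture_start_ms, stage_ends_ms):
    """Split glass-to-glass latency into per-stage durations (all values in ms).

    capture_start_ms: timestamp at which the frame was captured.
    stage_ends_ms: ordered (stage_name, end_timestamp_ms) pairs; the last
        pair marks the moment the frame is shown to the operator.
    All timestamps are assumed to come from one common clock.
    """
    breakdown = {}
    prev = capture_start_ms
    for name, end in stage_ends_ms:
        if name in breakdown or name == "total_g2g":
            raise ValueError(f"duplicate or reserved stage name {name!r}")
        if end < prev:
            raise ValueError(f"stage {name!r} ends before the previous stage")
        breakdown[name] = end - prev  # time spent in this stage
        prev = end
    # Total G2G latency: from capture to display (equals the sum of stages).
    breakdown["total_g2g"] = prev - capture_start_ms
    return breakdown
```

Timestamps taken on different machines (the excavator PC and the operator station) are only comparable if their clocks are synchronised, for example with PTP or NTP; otherwise only the on-board stages can be measured this way.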

These challenges were addressed by a team of scientists and engineers from the Robotic Systems Lab (RSL) at ETH Zürich while customising the Menzi Muck M545 walking excavator for autonomous operation and advanced teleoperation.

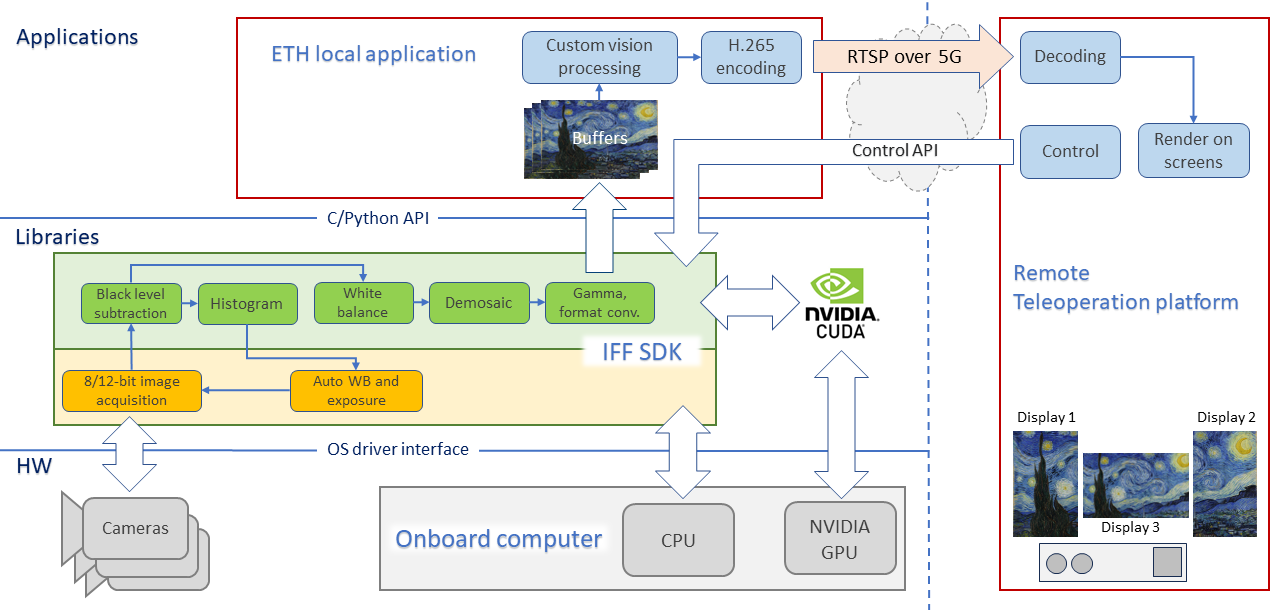

The RSL team equipped the M545 excavator with an advanced industrial computer, machine vision cameras, and various other sensors, and transmitted the data from all of these devices to a remote operator over a 5G network. The operator used a dedicated platform that simulated the excavator cabin and provided all the real-time camera and sensor data, metadata, and analytics needed to control the machine.

MRTech helped the RSL researchers prototype and implement the image-processing part of the teleoperation system and provided the IFF SDK for its productization, so let’s look at this in a bit more detail.

The system has four XIMEA industrial cameras mounted on the excavator: a 12 MP PCIe front camera, two 3 MP USB3 side-view cameras, and one 3 MP USB3 arm-view camera. All cameras are connected to an industrial PC with a powerful NVIDIA RTX A400 GPU.
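The camera set above can be written down as a small configuration structure, which also makes it easy to estimate how much raw data the PC must ingest before any processing or compression. This is a minimal sketch: the `Camera` class, the camera names, and the bandwidth functions are hypothetical, and because the article states neither frame rates nor bit depths, both are parameters (the 8-bit default is an assumption).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    name: str          # hypothetical identifier
    megapixels: float  # nominal resolution, as given in the article
    interface: str     # "PCIe" or "USB3"


# The four cameras described in the article (names are illustrative).
CAMERAS = [
    Camera("front", 12.0, "PCIe"),
    Camera("side_1", 3.0, "USB3"),
    Camera("side_2", 3.0, "USB3"),
    Camera("arm", 3.0, "USB3"),
]


def raw_bandwidth_mb_s(cam: Camera, fps: float, bits_per_pixel: int = 8) -> float:
    """Uncompressed raw (Bayer) data rate in MB/s, with 1 MB = 1e6 bytes.

    MP * 1e6 pixels * (bits / 8) bytes per pixel * fps / 1e6 bytes per MB
    simplifies to MP * bits / 8 * fps.
    """
    return cam.megapixels * bits_per_pixel / 8 * fps


def total_raw_bandwidth_mb_s(cams, fps: float, bits_per_pixel: int = 8) -> float:
    """Combined raw data rate of all cameras, assuming they share one frame rate."""
    return sum(raw_bandwidth_mb_s(c, fps, bits_per_pixel) for c in cams)
```

For example, at an assumed 30 fps with 8-bit raw pixels, `total_raw_bandwidth_mb_s(CAMERAS, fps=30)` returns 630.0 MB/s, of which 360 MB/s comes from the front camera alone. An estimate like this is useful when checking each camera against the throughput of its PCIe or USB3 link.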

This computer runs an image preprocessing application based on the IFF SDK. The application acquires raw images from the cameras, performs demosaicing (converting Bayer-pattern images into full-colour images), and transfers the images in the required format to a buffer for further specialised processing and subsequent streaming over the 5G network. It also provides an API for remote offline/online configuration and management of the cameras.
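Demosaicing reconstructs the two missing colour values at every pixel of a Bayer mosaic from neighbouring pixels. The article does not say which algorithm the IFF SDK uses, so the NumPy sketch below shows the simplest common method, bilinear interpolation, for an assumed RGGB pattern; the actual cameras' pattern may differ, and production pipelines typically use higher-quality, edge-aware methods. The function names are hypothetical.

```python
import numpy as np

# Integer interpolation weights; results are normalised by the weights of the
# samples actually present, so no fixed divisor is needed.
K_G = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]], dtype=np.float64)
K_RB = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=np.float64)


def _filter3x3(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """3x3 correlation with zero padding (same as convolution for symmetric kernels)."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="constant")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def bayer_masks_rggb(h: int, w: int):
    """Boolean masks of the R, G and B sites in an RGGB Bayer mosaic."""
    yy, xx = np.mgrid[0:h, 0:w]
    r = (yy % 2 == 0) & (xx % 2 == 0)
    b = (yy % 2 == 1) & (xx % 2 == 1)
    g = ~(r | b)
    return r, g, b


def demosaic_bilinear_rggb(raw: np.ndarray) -> np.ndarray:
    """Bilinear demosaicing: (H, W) RGGB mosaic -> (H, W, 3) float64 RGB image."""
    if raw.ndim != 2 or min(raw.shape) < 2:
        raise ValueError("expected a 2-D Bayer image of at least 2x2 pixels")
    raw = raw.astype(np.float64)
    r_m, g_m, b_m = bayer_masks_rggb(*raw.shape)
    channels = []
    for mask, k in ((r_m, K_RB), (g_m, K_G), (b_m, K_RB)):
        m = mask.astype(np.float64)
        # Normalised convolution: weighted sum of known samples divided by the sum
        # of their weights. Known samples are kept as-is, missing ones become the
        # average of their nearest same-colour neighbours (also at the borders).
        channels.append(_filter3x3(raw * m, k) / _filter3x3(m, k))
    return np.stack(channels, axis=-1)
```

Each output channel keeps the measured value where the sensor sampled that colour and averages the nearest same-colour neighbours elsewhere, which is why a uniformly coloured scene is reconstructed exactly.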

The following diagram illustrates how the teleoperation system processes camera streams.

Here, the “Custom vision processing” component refers to the joint processing of data from many sensors, so that all available data can be combined to assist the operator. Using the IFF SDK allowed the RSL team to keep its resources focused on research tasks, such as developing methods for multi-modal sensor fusion and high-rate estimation of object positions, in order to build an efficient operator assistance system.
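The article does not describe how the buffer between the preprocessing application and the downstream stages behaves. For latency-critical consumers such as custom vision processing or streaming, one common choice is a keep-latest buffer: the producer overwrites the previous frame and the consumer always receives the newest one, so a slow stage skips stale frames instead of accumulating a queue. The following is a minimal thread-safe sketch of that idea; the `LatestFrameBuffer` class is hypothetical and not an IFF SDK API.

```python
import threading


class LatestFrameBuffer:
    """Single-slot buffer: put() overwrites, get() returns the newest unread frame."""

    def __init__(self):
        self._cond = threading.Condition()
        self._frame = None
        self._seq = 0        # number of frames written so far
        self._read_seq = 0   # sequence number of the last frame handed out
        self._dropped = 0    # frames overwritten before anyone read them

    def put(self, frame):
        """Store a new frame, discarding the previous one if it was never read."""
        with self._cond:
            if self._seq > self._read_seq:
                self._dropped += 1
            self._frame = frame
            self._seq += 1
            self._cond.notify_all()

    def get(self, timeout=None):
        """Wait for a frame newer than the last one read.

        Returns (sequence_number, frame), or None if the timeout expires.
        """
        with self._cond:
            ready = self._cond.wait_for(lambda: self._seq > self._read_seq, timeout=timeout)
            if not ready:
                return None
            self._read_seq = self._seq
            return self._seq, self._frame

    @property
    def dropped(self):
        with self._cond:
            return self._dropped
```

The trade-off is that the consumer may skip frames (counted by `dropped`); a bounded FIFO would keep every frame but adds queueing delay whenever the consumer falls behind.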

As a result, the RSL team built a teleoperation system with low overall G2G latency and moved on to productizing the project.

ETH Zürich is a public research university in Zürich, Switzerland. Founded by the Swiss federal government in 1854 with the stated mission of educating engineers and scientists, the school focuses primarily on science, technology, engineering, and mathematics.

ETH Zürich is widely recognised for its influence on advances in science and technology. In international rankings, it consistently places among the best universities worldwide, typically ranking among Europe’s top three universities, behind the University of Oxford and the University of Cambridge (source: Wikipedia).

The Robotic Systems Lab investigates the development of machines, and of their intelligence, for operation in rough and challenging environments. With a strong focus on robots with arms and legs, its research includes novel actuation methods for advanced dynamic interaction, innovative designs for greater system mobility and versatility, and new control and optimisation algorithms for locomotion and manipulation. In search of clever solutions, the lab takes inspiration from humans and animals to improve the skills and autonomy of complex robotic systems and make them applicable in a variety of real-world scenarios.